How does question order impact survey responses?

Question ordering can have a significant effect on responses to surveys. Methodologists sometimes use grid format questions - which effectively show respondents multiple questions simultaneously - to obviate this. This experiment - part of our ongoing Methodology matters series - seeks to evaluate the best approach to deliver accurate public opinion data.

Introduction and background

In a previous experiment, we tested the impact of different kinds of formats on “grid” questions. In general, the results of that experiment showed that a so-called “dynamic” presentation produced higher quality data on average compared to standard grid items, where people see multiple items on the same page.

In this experiment, we test hypotheses about a situation where the “standard” format might be preferred: specifically, cases where we might expect substantial “order” effects (whereby the order in which the items are displayed significantly affects the response).

Experimental setup

To evaluate the impact of question order on survey response in grid questions, we fielded a survey experiment in which participants were randomized into one of four groups:

| | In-party first | Out-party first |
|---|---|---|
| "Standard" grid | Group A | Group C |
| "Dynamic" grid | Group B | Group D |

Respondents in the survey provided favorability ratings for the two major parties. Half of the sample answered the questions in a “standard grid” format (where they could see both parties on the same page) while the other half answered in a “dynamic” format (where they could only see one party at a time). Independent of the first condition, the order of the parties was randomized.

Respondents in the standard grid condition were shown multiple items per page in the grid setup (like the image above). Those who were in the dynamic grid condition, on the other hand, were shown each item on separate screens (the dynamic nature of the grid refers to the way that respondents are automatically advanced to the next item).
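The two-by-two assignment described above can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the function name, the condition labels, and the seed are hypothetical, and the survey platform's actual randomization procedure is not described in this article. Only the group letters come from the table.

```python
import random

# Group letters follow the 2x2 table above; the string labels are illustrative.
GROUPS = {
    ("standard", "in_party_first"): "A",
    ("dynamic", "in_party_first"): "B",
    ("standard", "out_party_first"): "C",
    ("dynamic", "out_party_first"): "D",
}

def assign_condition(rng):
    """Draw grid format and item order independently, each with probability 1/2."""
    grid_format = rng.choice(["standard", "dynamic"])
    item_order = rng.choice(["in_party_first", "out_party_first"])
    return grid_format, item_order, GROUPS[(grid_format, item_order)]

rng = random.Random(2024)  # fixed seed (an assumption) so the sketch is reproducible
assignments = [assign_condition(rng) for _ in range(2000)]
```

Because the two draws are independent, each of the four groups receives roughly a quarter of the sample in expectation.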

Research hypotheses

Going into this experiment, we had the following hypotheses (for additional detail, see our preregistered research design):

- Hypothesis 1: Evaluations of the parties will exhibit a strong order effect

- Hypothesis 2: This question order effect will only be present when respondents do not see both questions on the same screen

To test these hypotheses, we ran a two-item party favorability set (similar to those used in a great deal of political polling) on a national sample of 2,000 adults.

The favorability items:

- Do you have a favorable or unfavorable view of the Democratic Party?

- Do you have a favorable or unfavorable view of the Republican Party?

| Response | Value |
|---|---|
| Extremely favorable | +3 |
| Very favorable | +2 |
| Somewhat favorable | +1 |
| Neither favorable nor unfavorable | 0 |
| Somewhat unfavorable | -1 |
| Very unfavorable | -2 |
| Extremely unfavorable | -3 |

Item non-response was coded at the middle position.
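As a rough sketch, the coding scheme in the table could be expressed as a lookup with a fallback for non-response. The names `SCALE`, `MIDPOINT`, and `code_response` are hypothetical, and representing a skipped item as `None` is an assumption made for illustration.

```python
# Numeric values taken from the response table above.
SCALE = {
    "Extremely favorable": 3,
    "Very favorable": 2,
    "Somewhat favorable": 1,
    "Neither favorable nor unfavorable": 0,
    "Somewhat unfavorable": -1,
    "Very unfavorable": -2,
    "Extremely unfavorable": -3,
}
MIDPOINT = 0  # item non-response is coded at the middle of the scale

def code_response(answer):
    """Map a response label to its numeric value; None (assumed to mean a skip) maps to the midpoint."""
    if answer is None:
        return MIDPOINT
    return SCALE[answer]
```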

Evaluating the hypotheses

For this experiment, we are interested in differences in the distribution of responses across the different conditions. Because of the strong relationship between individual partisanship and evaluations of the parties, we are looking specifically at in-party and out-party evaluations (e.g. an in-party evaluation would be a Democrat’s evaluation of the Democratic Party; an out-party evaluation would be a Republican’s evaluation of the Democratic Party). Because we are looking specifically at partisans’ views, we exclude respondents who report no partisan affiliation and who further refuse to lean toward either party. Democrats include all those who either say they identify with the Democratic Party or who lean toward the Democratic Party (and similarly for Republicans). Partisanship was measured in a previous survey.
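The in-party and out-party recoding described above might look something like the following. The `party_id` codes (`"D"`, `"R"`, anything else for non-leaning independents) and the function name are assumptions made for this sketch, not the variable names used in the actual analysis.

```python
def party_evaluations(party_id, dem_rating, rep_rating):
    """Return (in_party, out_party) ratings for a partisan respondent.

    party_id is assumed to be "D" for Democrats and Democratic leaners and
    "R" for Republicans and Republican leaners. Any other value is treated
    as a respondent with no partisan lean, who is excluded (None).
    """
    if party_id == "D":
        return dem_rating, rep_rating
    if party_id == "R":
        return rep_rating, dem_rating
    return None  # excluded from the analysis
```

For example, a Republican who rates the Republican Party +2 and the Democratic Party -3 has an in-party evaluation of +2 and an out-party evaluation of -3.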

Method

To test our hypotheses, we use “bootstrapping” (repeated sampling from our data with replacement) to estimate the variation that we would expect from repeated trials. This approach is more computationally expensive than classic statistical tests, but it allows us to relax some of the more stringent assumptions of the classical tests.

We generated 10,000 bootstrapped samples from the original data and calculated the test statistics for each sample. We can then use the distribution of these statistics in much the same way that a researcher might use p-values in classical statistical tests. For example, if 9,970 of the 10,000 bootstrapped samples showed a higher value for a particular test statistic in a given comparison, we could be very confident that the result was “significant” and not just the result of expected variation.
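A minimal sketch of this kind of bootstrap comparison is shown below. It assumes the test statistic is the difference in mean ratings between two conditions and that each condition is resampled separately; the article does not spell out either detail. The function name, the seed, and the toy ratings are invented for illustration and are not data from the study.

```python
import random

def bootstrap_share_higher(group_a, group_b, n_boot=10_000, seed=0):
    """Share of bootstrap replicates in which mean(group_a) exceeds mean(group_b)."""
    rng = random.Random(seed)
    higher = 0
    for _ in range(n_boot):
        # Resample each condition with replacement, keeping its original size.
        resample_a = rng.choices(group_a, k=len(group_a))
        resample_b = rng.choices(group_b, k=len(group_b))
        if sum(resample_a) / len(resample_a) > sum(resample_b) / len(resample_b):
            higher += 1
    return higher / n_boot

# Toy in-party ratings on the -3..+3 scale (made up, not study data).
in_party_first = [3, 2, 2, 3, 1, 2, 3, 2, 1, 2]
out_party_first = [1, 0, -1, 1, 0, 0, 1, -1, 0, 1]
share = bootstrap_share_higher(in_party_first, out_party_first)
```

A share close to 1 (or close to 0) plays the role that a small p-value would play in a classical test, while a share near 0.5 suggests no detectable difference between the conditions.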

Results

Hypothesis 1: Evaluations of the parties will exhibit a strong order effect

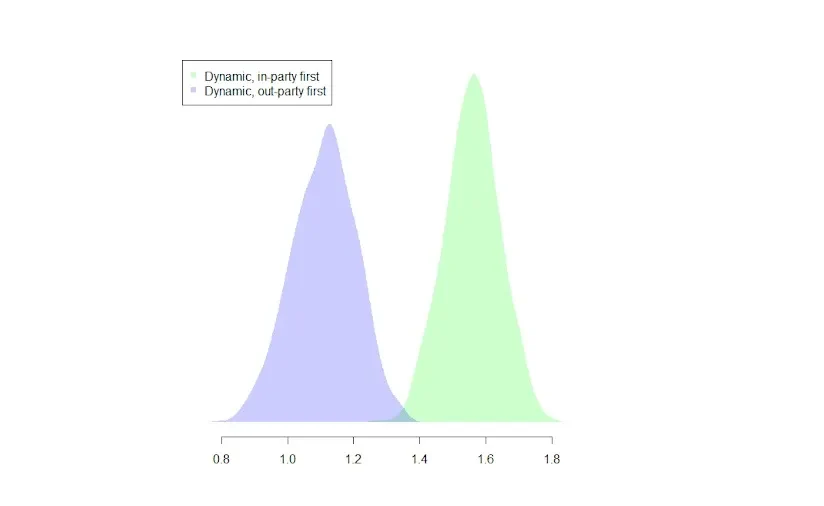

Average in-party evaluation by experimental condition among respondents in the dynamic grid condition

As shown in the figure above, the results showed a strong order effect among respondents in the dynamic grid condition on the evaluations of the in-party. The figure shows the empirical sampling distribution for each condition, and none of the bootstrapped samples showed a higher average for the out-party first condition compared to the in-party first condition. Evaluating the out-party first (negatively) induces a systematic reduction in ratings of the in-party (the magnitude is about half a scale point on average).

There is no comparable effect on out-party ratings.

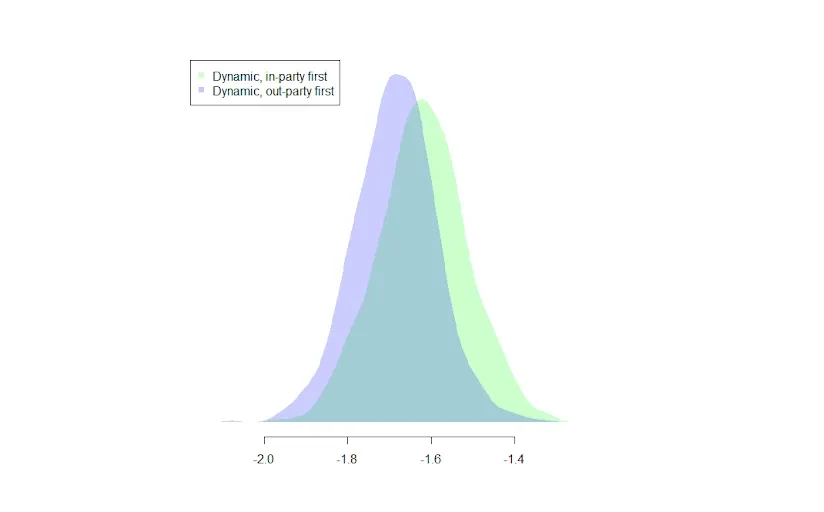

Average out-party evaluation by experimental condition among respondents in the dynamic grid condition

While evaluations of the out-party are somewhat higher after having rated the in-party, the effect here is not statistically significant (the out-party rating was higher in only about 80% of bootstrapped samples).

The evidence from the experiment shows a significant impact of question order among those in the dynamic grid condition.

Hypothesis 2: This question order effect will only be present when respondents do not see both questions on the same screen

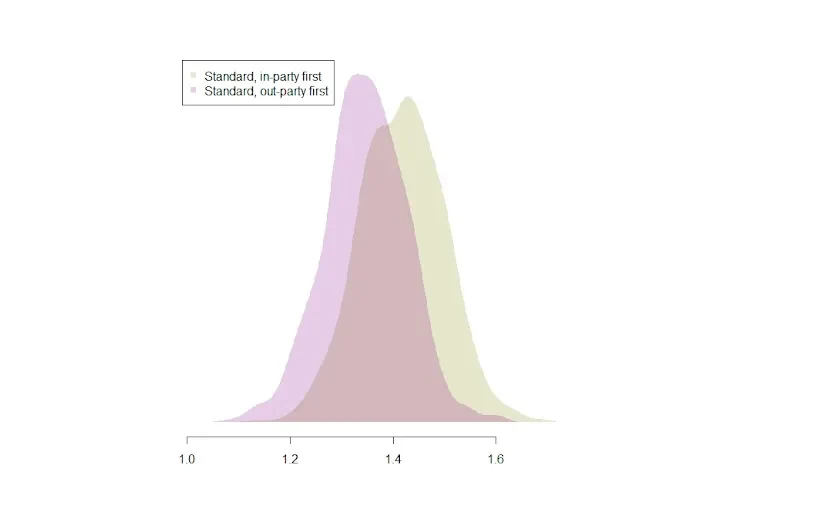

Average in-party evaluation by experimental condition among respondents in the standard grid condition

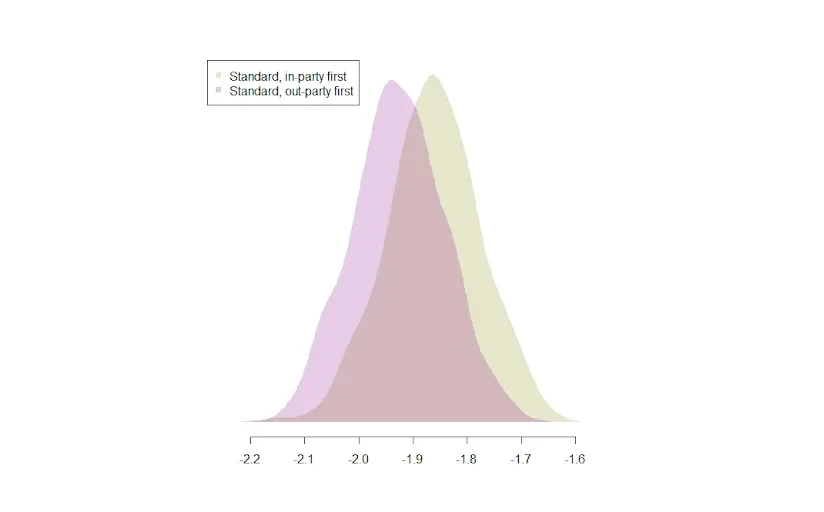

As expected, there is no statistically significant relationship between question order and the response pattern in the standard grid condition for either in the in- or out-party responses. When respondents can view both items on the same screen, the effect observed in the dynamic grid condition does not show up.

Average out-party evaluation by experimental condition among respondents in the dynamic grid condition

Effects by strength of partisanship

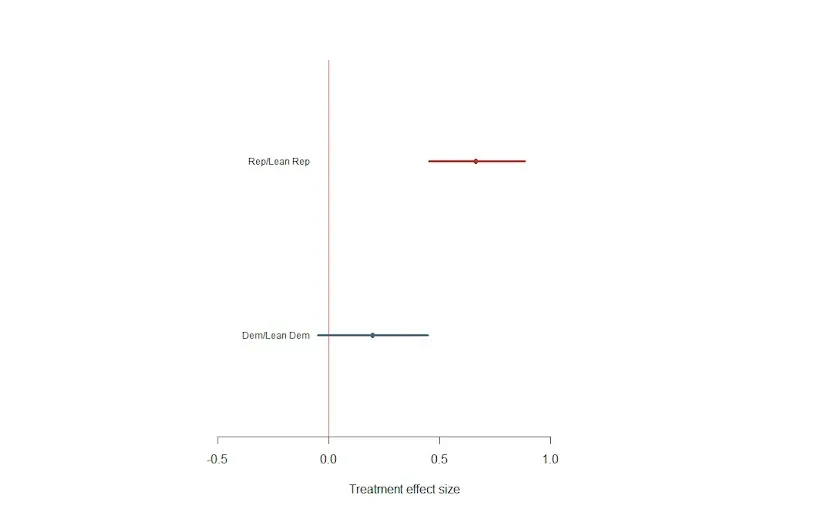

In analyses that were not pre-registered, we looked at how the treatment effect varied across different levels of partisanship. For the plots that follow, we will be looking at the magnitude of the treatment effect among different subgroups. This is specifically the in-party first versus out-party first difference. First, a look at the results by partisanship:

Republicans and Republican leaners showed a substantially larger treatment effect than did Democrats and Democratic leaners.

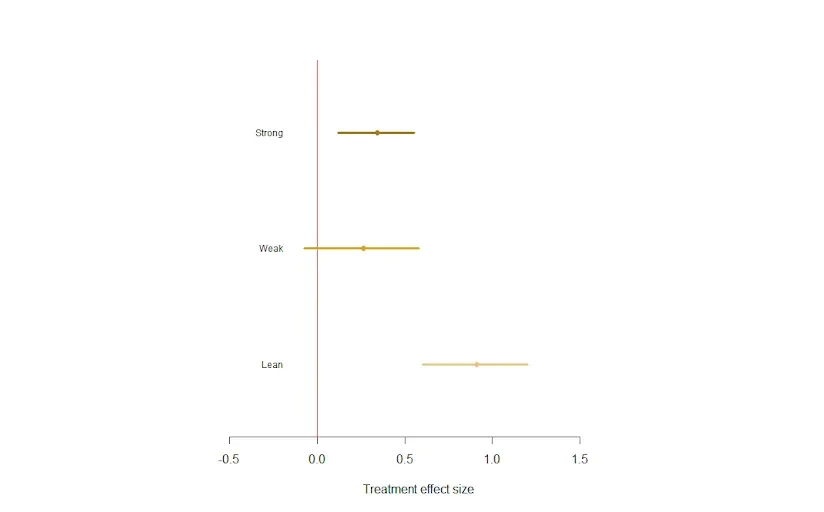

We can also break out the results by strength of partisanship:

As we may have expected evaluations of the parties among partisans exhibited less of an order effect than did those among independents who leaned toward one of the parties. This is perhaps due to more strongly held attitudes amongst partisans.

General discussion

The results of this experiment show that standard grid presentations are more appropriate when we might expect a significant question order effect. The standard grid obviates the question order effect because, in practice, both questions are presented simultaneously.

The results here are reminiscent of experiments reported by Schuman and Presser in their classic book on survey response. In that book, they report on several order effect experiments that show a strong “norm of reciprocity” among survey respondents. Writing during the thick of the Cold War between the U.S. and the Soviet Union, they showed that views about access for journalists from the U.S. or the USSR varied substantially depending on which country was asked about first. Something similar seems to be going on in this experiment. When first asked about the out-party (where most partisans responded predictably negatively), respondents seemed to moderate their views of their own party.

About Methodology matters

Methodology matters is a series of research experiments focused on survey experience and survey measurement. The series aims to contribute to the academic and professional understanding of the online survey experience and promote best practices among researchers. Academic researchers are invited to submit their own research design and hypotheses.