The impact of grid format questions on survey responses

What impact do grid format questions have on respondents' answers in public opinion surveys? This experiment, part of our ongoing Methodology matters series, provides some evidence that standard grids, in which respondents are shown multiple items per page, produce lower-quality data than the alternatives. Additionally, asking too many items within a grid produces lower-quality responses.

Introduction and background

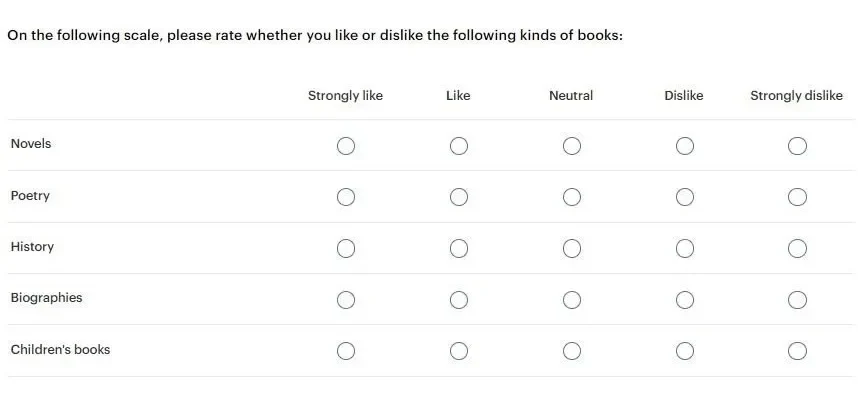

Surveys often group similarly formatted questions into “grids” (sometimes also called “matrix” items).

In theory, these grids make life a little easier on survey respondents by helping them to see that the content is related. Rather than having to jump from question to question, they can stay in the same “mode” and efficiently record their responses to a set of related questions.

It seems possible, however, that this format could degrade data quality. Faced with multiple questions on similar topics, respondents may tire of the repetitive content and begin to tune out. The format could also lead to user error if respondents have difficulty navigating the grid and select a response that doesn’t actually reflect their position.


Experimental setup

To evaluate the impact of grid format on survey responses, we fielded a survey experiment. Participants in the experiment were randomized into one of four groups:

|  | 6 items | 12 items |
|---|---|---|
| Dynamic grid | Group A | Group C |
| Standard grid | Group B | Group D |

Respondents in the standard grid condition were shown multiple items per page in a grid layout (like the image above). Those in the dynamic grid condition, on the other hand, were shown each item on a separate screen (the grid is "dynamic" because respondents are automatically advanced to the next item).

Respondents in the 12-item conditions answered 12 questions on the same topic with similar formats, while those in the 6-item conditions answered only 6. All respondents in the study were asked the same core items (listed in the Data section below).
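The four-group design can be sketched as a balanced random assignment. This is a minimal illustration, not the study's actual procedure, which is not described here: the `assign_groups` function, the `CONDITIONS` mapping, and the shuffle-then-deal allocation are all assumptions, with group letters following the labels in the Hypothesis 3 results table.

```python
import random
from collections import Counter

# Hypothetical mapping of group letters to (grid format, number of items),
# following the labels used in the Hypothesis 3 results table.
CONDITIONS = {
    "A": ("dynamic", 6),
    "B": ("standard", 6),
    "C": ("dynamic", 12),
    "D": ("standard", 12),
}


def assign_groups(respondent_ids, seed=None):
    """Randomly assign respondents to the four groups.

    The IDs are shuffled and then dealt out round-robin, so group sizes
    differ by at most one. This is an illustrative scheme only.
    """
    rng = random.Random(seed)
    ids = list(respondent_ids)
    rng.shuffle(ids)
    labels = sorted(CONDITIONS)
    return {rid: labels[i % len(labels)] for i, rid in enumerate(ids)}


if __name__ == "__main__":
    assignment = assign_groups(range(5000), seed=42)
    print(Counter(assignment.values()))
```

With 5,000 respondents this scheme puts exactly 1,250 in each group; simple independent randomization (drawing a letter per respondent) would instead give sizes that vary around 1,250.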

Research hypotheses

Going into this experiment, we had the following hypotheses (for additional detail, see our preregistered research design):

H1: A standard grid format produces lower quality survey data than a series of independent questions

H2: A greater number of items produces lower quality survey data than a smaller number of items

H3: The impact of the grid format is exacerbated by the number of items

Data

To test these hypotheses, we fielded the 6-item Christian Nationalism battery (featured prominently in recent scholarly work) to a national sample of 5,000 adults. All respondents answered the core items of the battery, and respondents in the 12-item conditions also answered 6 additional items.

The core items:

- The federal government should declare the United States a Christian nation

- The federal government should enforce strict separation of church and state (R)

- The federal government should advocate Christian values

- The federal government should allow the display of religious symbols in public spaces

- The federal government should allow prayer in public schools

- The success of the United States is part of God's plan

The additional items:

- America's founding fathers intended for the country to be a Christian nation

- Having a national religion is incompatible with American values (R)

- The vast resources of the United States indicate that God has chosen it to lead

- The U.S. Constitution was inspired by God and reflects God's vision for America

- Religious leaders should stay out of government and politics (R)

- The United States should not give special privileges to religious groups (R)

(R) indicates items that are reverse scored.
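When items are combined into a scale score, responses to the (R) items are flipped so that higher values point in the same direction for every item. A minimal sketch, assuming a 1-5 agree/disagree response scale (the article does not state the number of response options); `reverse_score`, `score_items`, and the item keys in the example are hypothetical names.

```python
# Assumption: a 1-5 response scale. Adjust SCALE_MIN/SCALE_MAX if the
# battery uses a different number of response options.
SCALE_MIN, SCALE_MAX = 1, 5


def reverse_score(value, lo=SCALE_MIN, hi=SCALE_MAX):
    """Flip a response on a lo..hi scale (e.g. 2 -> 4 on a 1-5 scale).

    Missing responses (None) stay missing.
    """
    if value is None:
        return None
    if not lo <= value <= hi:
        raise ValueError(f"response {value} is outside {lo}-{hi}")
    return lo + hi - value


def score_items(responses, reversed_items):
    """Return a copy of an item -> response dict with (R) items flipped."""
    return {
        item: reverse_score(value) if item in reversed_items else value
        for item, value in responses.items()
    }


# Hypothetical item keys for one respondent's answers.
row = {"christian_nation": 4, "separation_church_state": 2, "school_prayer": 5}
print(score_items(row, reversed_items={"separation_church_state"}))
# {'christian_nation': 4, 'separation_church_state': 4, 'school_prayer': 5}
```

Flipping is only for building scale scores; a check that the reverse-scored item is negatively correlated with the others has to use the raw, unflipped responses.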

Evaluating the hypotheses

We evaluate response quality across several dimensions:

- Scale reliability - statisticians and psychometricians measure scale reliability in many different ways. For the purposes of this study we look at two such measures: Cronbach’s alpha and Guttman’s Lambda-6 (both calculated with the “psych” package in R).

- Reverse scored item - the Christian nationalism scale has one “reverse scored” item (“strict separation of church and state”). This item should be negatively correlated with the other items in the scale.

- Straight-lining - one particular concern with these sorts of items is that respondents will answer “straight down the line,” giving the same response to each item. For each respondent, we count how many of the five non-reversed items in the core battery received the same response.

- Item non-response - respondents in most surveys are not required to answer every question. In long grids, there is a concern that respondents might tire of supplying responses and leave some items blank. Like the straight-lining measure, this is a count of the items left blank on the scale.

In general, we expect the dynamic, 6-item grid to produce the highest-quality data and the standard, 12-item grid to produce the lowest-quality data.
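The quality measures above can be sketched with NumPy. This is an illustration under stated assumptions, not the study's code (the reliability statistics were computed with R's psych package): Lambda-6 is computed in its standardized form from the item correlation matrix using squared multiple correlations (SMCs), the reliability functions assume complete rows, the reverse-item measure is taken to be the mean of its pairwise correlations with the other items, and straight-lining is assumed to mean the number of items sharing a respondent's most common answer. All function names are hypothetical.

```python
from collections import Counter

import numpy as np


def cronbach_alpha(X):
    """Cronbach's alpha for an (n_respondents, k_items) array with no missing values."""
    X = np.asarray(X, dtype=float)
    k = X.shape[1]
    item_variances = X.var(axis=0, ddof=1).sum()
    total_variance = X.sum(axis=1).var(ddof=1)
    return k / (k - 1) * (1 - item_variances / total_variance)


def guttman_lambda6(X):
    """Standardized Guttman's Lambda-6: 1 - sum(1 - SMC_j) / sum of all correlations."""
    R = np.corrcoef(np.asarray(X, dtype=float), rowvar=False)
    # Squared multiple correlation of each item with all other items.
    smc = 1 - 1 / np.diag(np.linalg.inv(R))
    return 1 - (1 - smc).sum() / R.sum()


def reverse_item_correlation(X, reverse_col):
    """Mean correlation of the raw (unflipped) reverse item with each other item."""
    R = np.corrcoef(np.asarray(X, dtype=float), rowvar=False)
    others = [j for j in range(R.shape[0]) if j != reverse_col]
    return R[reverse_col, others].mean()


def straightlining(row):
    """Number of items sharing the respondent's most common answer (blanks ignored)."""
    answers = [v for v in row if v is not None]
    return Counter(answers).most_common(1)[0][1] if answers else 0


def item_nonresponse(row):
    """Number of items left blank (None)."""
    return sum(v is None for v in row)
```

Higher alpha and Lambda-6, a more negative reverse-item correlation, and lower straight-lining and non-response counts all indicate higher quality. How the study handled blank answers in the reliability calculations is not stated.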

Method

To test our hypotheses, we use “bootstrapping” (repeated sampling from our data with replacement) to estimate the variation that we would expect from repeated trials. This approach is more computationally expensive than classic statistical tests, but it allows us to relax some of the more stringent assumptions of the classical tests.

We generated 10,000 bootstrapped samples from the original data and calculated the test statistics for each sample. We can then read the distribution of these statistics much as a researcher would read p-values in a classical statistical test. For example, if 9,970 of the 10,000 bootstrapped samples showed a higher value for a particular test statistic in a given comparison, we could be very confident that the result was “significant” and not just the result of expected variation.
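The bootstrap comparison can be sketched as follows: resample respondents with replacement within each condition, recompute a statistic on each resample, and report the share of replicates in which the expected ordering holds. This is a minimal illustration, not the study's code; `bootstrap_share` and `classify` are hypothetical helpers, and the 95% cutoff follows the significance definition given with the Hypothesis 3 results.

```python
import numpy as np


def bootstrap_share(better, worse, statistic, n_boot=10_000, seed=0):
    """Share of bootstrap replicates in which statistic(better) > statistic(worse).

    `better` and `worse` are arrays whose rows are respondents; each replicate
    resamples rows with replacement, separately within each group.
    """
    rng = np.random.default_rng(seed)
    a = np.asarray(better, dtype=float)
    b = np.asarray(worse, dtype=float)
    hits = 0
    for _ in range(n_boot):
        resample_a = a[rng.integers(0, len(a), size=len(a))]
        resample_b = b[rng.integers(0, len(b), size=len(b))]
        hits += int(statistic(resample_a) > statistic(resample_b))
    return hits / n_boot


def classify(share, threshold=0.95):
    """Translate a share of replicates into the wording used in the results tables."""
    direction = "correct" if share > 0.5 else "incorrect"
    if share >= threshold or share <= 1 - threshold:
        return f"Directionally {direction} and statistically significant"
    return f"Directionally {direction}, not statistically significant"
```

Because rows are resampled, the same function works for per-respondent measures (1-D arrays) and for scale statistics such as Cronbach's alpha (2-D respondent-by-item arrays). For measures where lower values mean higher quality, such as straight-lining or item non-response, the expectation can be expressed by negating the statistic, e.g. `statistic=lambda x: -x.mean()`.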

Results

Hypothesis 1: A standard grid format produces lower quality survey data than a series of independent questions

| Test statistic | Expectation | % of bootstrapped samples meeting expectation | Result |
|---|---|---|---|
| Cronbach’s alpha | Dynamic format has higher reliability than standard grid format | 83% | Directionally correct, not statistically significant |
| Guttman's Lambda-6 | Dynamic format has higher reliability than standard grid format | 78% | Directionally correct, not statistically significant |
| Reverse item correlation | Dynamic format has a stronger negative reverse-item correlation than standard grid format | 46% | Directionally incorrect, not statistically significant |
| Straight-lining | Dynamic format shows less straight-lining behavior than standard grid format | 73% | Directionally correct, not statistically significant |
| Item non-response | Dynamic format shows less item non-response than standard grid format | 99% | Directionally correct and statistically significant |

The differences between respondents in the dynamic grid condition and those in the standard grid condition were generally modest and not statistically significant. For several of the quality measures (scale reliability and straight-lining), the differences were in the expected direction but not significantly so. The only significant difference among the quality measures examined here was in item non-response: respondents in the dynamic grid condition showed lower rates of item non-response than those in the standard grid condition. However, rates of item non-response were very low overall, so although the difference is statistically significant, it is not substantively meaningful.

Hypothesis 2: A greater number of items produces lower quality survey data than a smaller number of items

| Test statistic | Expectation | % of bootstrapped samples meeting expectation | Result |
|---|---|---|---|
| Cronbach’s alpha | 6-item condition has higher reliability than 12-item | 99% | Directionally correct and statistically significant |
| Guttman's Lambda-6 | 6-item condition has higher reliability than 12-item | 99% | Directionally correct and statistically significant |
| Reverse item correlation | 6-item condition has a stronger negative reverse-item correlation than 12-item | 17% | Directionally incorrect, not statistically significant |
| Straight-lining | 6-item condition shows less straight-lining behavior than 12-item | <1% | Directionally incorrect and statistically significant |
| Item non-response | 6-item condition shows less item non-response than 12-item | 96% | Directionally correct and statistically significant |

The number of items shown to each respondent had a larger effect on data quality than the format of the items (at least for these quality measures). In general, the data suggest that a smaller number of items leads to higher-quality data. This holds for the scale reliability measures and for item non-response. However, contrary to our expectations, straight-lining was more common in the 6-item conditions than in the 12-item conditions. This may be due to the additional items: three of the six are reverse scored, so respondents in the 12-item conditions encountered more reverse-scored items.

In an analysis that was not preregistered, we investigated this possibility. Because item order was randomized, respondents varied in how many reverse-scored items they were shown before completing the 5 non-reversed core items used to calculate the straight-lining measure. There is a near-linear relationship between the number of reverse-scored items a respondent was shown and their degree of straight-lining.
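One way to look for a near-linear pattern like this is to average the straight-lining score at each count of reverse-scored items seen and fit a least-squares line to the respondent-level data. The article does not say how this exploratory analysis was run, so this is only a sketch; the function name and the toy data in the test are hypothetical.

```python
import numpy as np


def straightlining_by_reverse_count(n_reverse_seen, straightlining_scores):
    """Mean straight-lining score at each number of reverse-scored items seen,
    plus the slope of an ordinary least-squares line through all respondents."""
    x = np.asarray(n_reverse_seen, dtype=float)
    y = np.asarray(straightlining_scores, dtype=float)
    # Average score among respondents who saw exactly k reverse-scored items.
    means = {int(k): float(y[x == k].mean()) for k in np.unique(x)}
    slope, _intercept = np.polyfit(x, y, deg=1)
    return means, float(slope)
```

Roughly equal steps between consecutive group means, summarized well by the fitted slope, are what a near-linear relationship looks like; large departures of the means from the line would argue against it.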

Hypothesis 3: The impact of the grid format is exacerbated by the number of items

Going into this experiment, we expected the 6-item dynamic grid to provide the highest-quality data overall. The results do not fully support this expectation, but they are not inconsistent with it either. On scale reliability, the 6-item dynamic grid performed significantly better than both 12-item conditions, though not significantly better than the 6-item standard grid. There were no measures where the short, dynamic grid (Group A) performed worse overall than the other conditions.

The table below shows the average test statistics for each condition as well as pairwise comparisons. Letters beneath an entry indicate the conditions against which that entry showed significantly higher quality. For example, the first entry in the table shows the performance of the 6-item dynamic grid on Cronbach’s alpha. This condition had a Cronbach’s alpha of 0.75, which was significantly higher than that of either 12-item condition (indicated by the “C” and the “D”). Significance is defined here as a higher quality score in at least 95% of the bootstrapped samples.

|  | Group A (Dynamic/6-item) | Group B (Standard/6-item) | Group C (Dynamic/12-item) | Group D (Standard/12-item) |
|---|---|---|---|---|
| Cronbach's alpha | 0.75 | 0.74 | 0.72 | 0.72 |
| Guttman's Lambda-6 | 0.82 | 0.81 | 0.80 | 0.80 |
| Reverse item correlation | -0.30 | -0.30 | -0.31 | -0.30 |
| Straight-lining | 3.86 | 3.86 | 3.76 | 3.79 |
| Item non-response | 0.05 | 0.04 | 0.03 | 0.09 |
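The letter notation can be generated mechanically from pairwise bootstrap results. A minimal sketch: `significance_letters` is a hypothetical helper that assumes each ordered pair of conditions already has a share of bootstrap samples in which the first condition showed higher quality; the shares in the example are made up for illustration and are not the study's results.

```python
from itertools import permutations


def significance_letters(shares, threshold=0.95):
    """Map each condition to the letters of the conditions it beat.

    `shares[(x, y)]` is the share of bootstrap samples in which condition x
    showed higher quality than condition y. Missing pairs count as 0.
    """
    conditions = sorted({c for pair in shares for c in pair})
    letters = {c: "" for c in conditions}
    for x, y in permutations(conditions, 2):
        if shares.get((x, y), 0.0) >= threshold:
            letters[x] += y
    return letters


# Illustrative (made-up) shares for a single measure.
example = {("A", "B"): 0.80, ("A", "C"): 0.97, ("A", "D"): 0.98, ("B", "A"): 0.20}
print(significance_letters(example))
# {'A': 'CD', 'B': '', 'C': '', 'D': ''}
```

Each measure would get its own `shares` dictionary, with the comparison oriented so that "higher quality" means higher reliability, a more negative reverse-item correlation, or lower straight-lining and non-response.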

Timing

We didn’t include timing among the quality measures because there is no straightforward mapping between the time spent answering a question and response quality. Too little time spent on a question is an obvious problem, but too much time could indicate problems as well.

| Condition | Median number of seconds per item |
|---|---|
| Group A (Dynamic/6-item) | 5.7 |
| Group B (Standard/6-item) | 6.3 |
| Group C (Dynamic/12-item) | 6.0 |
| Group D (Standard/12-item) | 6.6 |

In this study, participants in the short, dynamic grid condition spent significantly less time on each item than did individuals in any other condition. Their median time per item was nearly a full second (0.9 seconds) faster than that of individuals in the standard grid condition who answered 12 items.

General discussion

The results of this experiment show that, at least in some cases, data quality can be improved by attending to question format and respondent burden. The experiment also inadvertently revealed the importance of including reverse-scored items in scale construction. The Christian Nationalism scale, as used in recent work, includes only one reverse-scored item. This likely contributes to poorer data quality (although nothing in this experiment should be construed to mean that the scale is not valid).

About Methodology matters

Methodology matters is a series of research experiments focused on survey experience and survey measurement. The series aims to contribute to the academic and professional understanding of the online survey experience and promote best practices among researchers. Academic researchers are invited to submit their own research design and hypotheses.