The impact of question format on knowledge about politics and government

Political knowledge measures what people know and how they reason about politics, but scholars continue to debate the best way to measure this concept. This experimental study validates an alternative question format that allows respondents to express their level of belief certainty in their knowledge about politics and government.

Currently, the most common method for measuring political knowledge is with closed-ended multiple-choice questions, where respondents choose from a fixed number of answer choices. However, existing political knowledge scales cannot distinguish respondents based on the confidence in their answers to political knowledge questions. Consequently, scholars lack valuable information about citizens’ awareness of politics and government. The "certainty-in-knowledge" format tested here provides respondents with a meaningful way to express how certain they feel about their answers, allowing opinion surveys to better capture the heterogeneity in political knowledge among citizens. This shift in measurement can be seamlessly incorporated into questionnaires with little additional cost or survey length.


Summary and takeaway

The analyses indicate that a question format eliciting belief certainty significantly reduces the likelihood that respondents look up answers during online surveys, compared with the multiple-choice format with a follow-up question. The belief certainty format also affects the observed gender gap in political knowledge: it evaluates the knowledge of men and women similarly, even when a "Don't Know" (DK) response option is available. Pre-registration materials are available here: https://osf.io/dkv2u/

Experimental setup

Respondents are randomly assigned on two factors, yielding four experimental groups and a total sample of 2,161 respondents. The first factor is question format: respondents answer political knowledge questions using either the standard closed-ended format (i.e., multiple-choice response options with a follow-up question about belief certainty) or the certainty-in-knowledge format (i.e., an "all-in-one" question that combines knowledge and belief certainty response options). The second factor randomizes the presence of a DK option (i.e., its inclusion or exclusion). Respondents are unaware of the treatment group to which they have been assigned.
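To make the two-factor design concrete, here is a minimal sketch of how respondents could be allocated to the four cells. It assumes simple, independent randomization of each factor; the study does not say whether simple or blocked randomization was used, and the condition labels and seed are illustrative only.

```python
import random
from collections import Counter

# Factor labels are hypothetical names for the conditions described above.
FORMATS = ("multiple_choice", "certainty_in_knowledge")  # factor 1: question format
DK_OPTION = (False, True)                                 # factor 2: DK option shown?

def assign_condition(rng):
    """Independently randomize both factors for one respondent."""
    return rng.choice(FORMATS), rng.choice(DK_OPTION)

rng = random.Random(2024)  # fixed seed so the sketch is reproducible
assignments = [assign_condition(rng) for _ in range(2161)]

# Tabulate the four experimental groups.
cell_counts = Counter(assignments)
for cell, n in sorted(cell_counts.items()):
    print(cell, n)
```

With simple randomization the four cells come out roughly, but not exactly, equal in size; a blocked design would be needed to force equal cells.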

Table 1: Question Wording for Political Knowledge Items

| Multiple-choice | Certainty-in-knowledge |
|---|---|
| Q1. What is the term limit for members of the U.S. Congress under the U.S. Constitution? | Q1. Under the U.S. Constitution there are no term limits for members of the U.S. Congress. |
| Q2. How much of a majority is required for the U.S. Senate and U.S. House to override a presidential veto? | Q2. A one-half majority is required for the U.S. Senate and U.S. House to override a presidential veto. |
| Q3. At present, how many women serve in the U.S. Supreme Court? | Q3. At present, four of the U.S. Supreme Court Justices are women. |
| Q4. Which party has a majority of seats in the U.S. House of Representatives? | Q4. Democrats have a majority of seats in the U.S. House of Representatives. |
| Q5. Who is the current prime minister of the United Kingdom? | Q5. Rishi Sunak is the current prime minister of the United Kingdom. |
| Q6. In which of the following areas does the U.S. Federal Government spend the most money? | Q6. Foreign aid is the area where the U.S. Federal Government spends the most money. |
| Note: The bolded '*' symbol indicates the correct answer at the time of the study. | Note: The correct answer at the time of the study is italicized in parentheses. |

Table 1 shows the difference between the two types of questions and lists the question topics used in this study. The questions in Table 1 focus on the structure of government and U.S. politics, topics that appear in landmark surveys such as the American National Election Studies (ANES). The multiple-choice format consists of two questions: a typical political knowledge question with four response options, and a follow-up question that asks for belief certainty on a four-point scale ("Very Uncertain"; "Uncertain"; "Certain"; "Very Certain"). The certainty-in-knowledge format consists of a single question that elicits a true or false designation along with a person's degree of certainty in that evaluation (e.g., "Probably True" vs. "Definitely True" or "Probably False" vs. "Definitely False"). When someone says "Probably True," they believe the statement is true but also acknowledge the possibility that it might not be. When someone says "Definitely True," they are expressing high confidence that the statement is true, with little room for doubt or uncertainty.

Research hypotheses

Through graded response options, respondents can report how certain they feel about their answer choice. As a result, someone who is unsure about their answer has an alternative to a DK response. In this way, eliciting belief certainty can help reduce gender disparities in "Don't Know" responding. This change in question format is hypothesized to make women more willing to choose a valid response over the DK option.

Hypothesis 1: Gender differences in responding to “Don’t Know” will decrease when belief certainty can be expressed on political knowledge questions compared to the standard multiple-choice format.

The certainty-in-knowledge format is expected to decrease the motivation to do outside research by allowing respondents to admit uncertainty about the correct answer. The innovation of the certainty-in-knowledge format is that it allows respondents to acknowledge their certainty (or lack thereof) about their answer. With a more conducive survey environment for self-assessment, the motivation to do outside research should be reduced. Therefore, the certainty-in-knowledge format is hypothesized to decrease answer look-up in online surveys.

Hypothesis 2: The prevalence of answer look-up will be lower among respondents answering the certainty-in-knowledge format compared to the standard multiple-choice format.

Evaluating the hypotheses

Methods

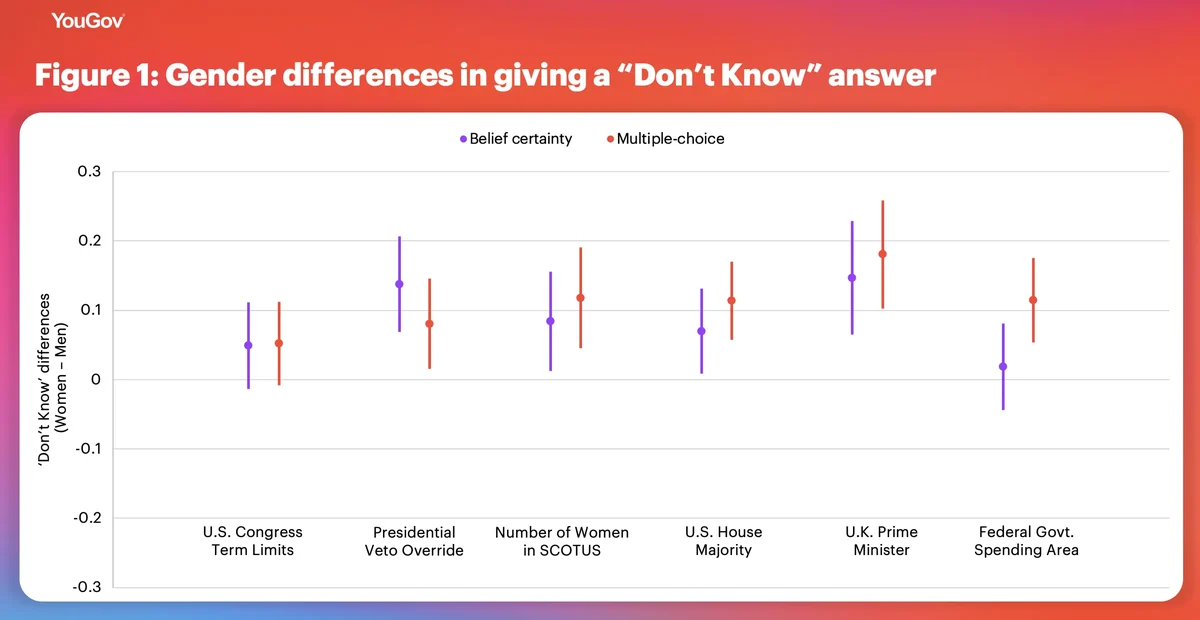

Hypothesis 1 predicts that gender differences in responding to “Don’t Know” (DK) on political knowledge questions will decrease when respondents can express the certainty of their responses. To test this, I use a difference-in-means test to compare the number of DK answers between women and men. A positive value indicates that women are giving more DK answers than men, whereas a negative value indicates that women are giving fewer DK answers than men. I also employ a difference-in-differences model to explore the effects of question format on overall levels of knowledge about politics and government.
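The difference-in-means comparison described above can be sketched as follows. This is an illustration only, not the author's code: the DK counts are made up, the function name `dk_gap` is hypothetical, and the p-value uses a normal approximation to a Welch-type t statistic (reasonable for samples of the size reported here, but not necessarily the exact test used in the study).

```python
from math import sqrt
from statistics import NormalDist, mean, variance

def dk_gap(women_dk, men_dk):
    """Return (Women - Men difference, t statistic, approximate two-tailed p)."""
    diff = mean(women_dk) - mean(men_dk)
    # Standard error of the difference, allowing unequal group variances.
    se = sqrt(variance(women_dk) / len(women_dk) + variance(men_dk) / len(men_dk))
    t = diff / se
    # Normal approximation to the t distribution (assumes large samples).
    p = 2 * (1 - NormalDist().cdf(abs(t)))
    return diff, t, p

# Made-up per-respondent DK counts (0 to 6 items answered "Don't Know").
women = [0, 1, 2, 0, 1, 3, 0, 1]
men = [0, 0, 1, 0, 1, 2, 0, 0]

diff, t, p = dk_gap(women, men)
print(f"Women - Men difference in DK answers: {diff:.2f}")
```

A positive `diff` corresponds to women giving more DK answers than men, matching the sign convention used in the text.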

Hypothesis 2 predicts lower answer look-up in the belief certainty format compared to the multiple-choice format. Existing methods for detecting answer look-up in online surveys include self-reports of cheating or “catch questions,” both of which provide information about answer look-up only in the aggregate. These methods can indicate how many respondents admitted to looking up answers or answered a catch question correctly, but they cannot specify which particular questions were affected by look-up. To overcome this limitation, I use a paradata method involving JavaScript code embedded in each political knowledge question, which tracks whether respondents temporarily navigate away from the web survey's browser window. This method is a more accurate and reliable way to detect answer look-up, as it does not require respondents to answer additional questions or admit to cheating.
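The article does not publish the JavaScript or the format of the resulting paradata, so the following is only a sketch of how item-level look-up flags might be derived once such data are exported. It assumes a hypothetical log of `(respondent_id, item, event)` records in which `"blur"` marks the survey window losing focus; the field names and event labels are assumptions.

```python
from collections import defaultdict

ITEMS = ("Q1", "Q2", "Q3", "Q4", "Q5", "Q6")

def lookup_flags(events):
    """Map each respondent to {item: 1 if the window lost focus on that item, else 0}."""
    flags = defaultdict(lambda: dict.fromkeys(ITEMS, 0))
    for respondent_id, item, event in events:
        row = flags[respondent_id]  # touching the key registers the respondent
        if event == "blur":
            row[item] = 1
    return dict(flags)

# Hypothetical paradata log: r1 left the window on Q3 and Q5, r2 never did.
events = [
    ("r1", "Q1", "focus"),
    ("r1", "Q3", "blur"),
    ("r1", "Q3", "focus"),
    ("r1", "Q5", "blur"),
    ("r2", "Q2", "focus"),
]

flags = lookup_flags(events)
# Summing the item-level flags gives a per-respondent count from 0 to 6.
lookup_index = {rid: sum(row.values()) for rid, row in flags.items()}
print(lookup_index)  # {'r1': 2, 'r2': 0}
```

Because each flag is tied to a specific item, this kind of record can show which questions were affected, which aggregate self-reports and catch questions cannot.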

Results

Hypothesis 1

I begin by investigating whether women are less likely to choose the DK option when they can express how certain they are of their political knowledge answers (H1). Next, I compare the presence of answer look-up across question formats using the paradata obtained from the survey (H2). The first hypothesis tests the effect of the question format change on gendered differences in DK responding (represented here as the Women – Men difference in giving a DK response). Women are more likely than men to give a DK response across items in the standard multiple-choice format (Women-DK index, Mean=0.65; Men-DK index, Mean=0.45, df=1,081, two-tailed test, p=.008). For the certainty-in-knowledge format, there is also a significant difference in the number of DK responses (Women-DK index, Mean=0.85; Men-DK index, Mean=0.56, df=1,017, two-tailed test, p=.0002).

Across items, I find a non-significant difference between men and women on only 2 of the 6 items: "U.K. Prime Minister" and "Federal Govt. Spending." In most cases, the belief certainty format shows smaller differences between men and women, but one item about institutional rules ("Presidential Veto Override") shows the reverse pattern. Keep in mind that in the multiple-choice format, the experiment employed a follow-up question on belief certainty (i.e., a branching format) after respondents gave a substantive answer, whereas the certainty-in-knowledge format employs an "all-in-one" question format. Despite the uneven number of questions, prompting respondents to report their belief certainty in the multiple-choice format may increase their awareness of the uncertainty of their answers in much the same way as the all-in-one format does, potentially leading to null results at the item level.

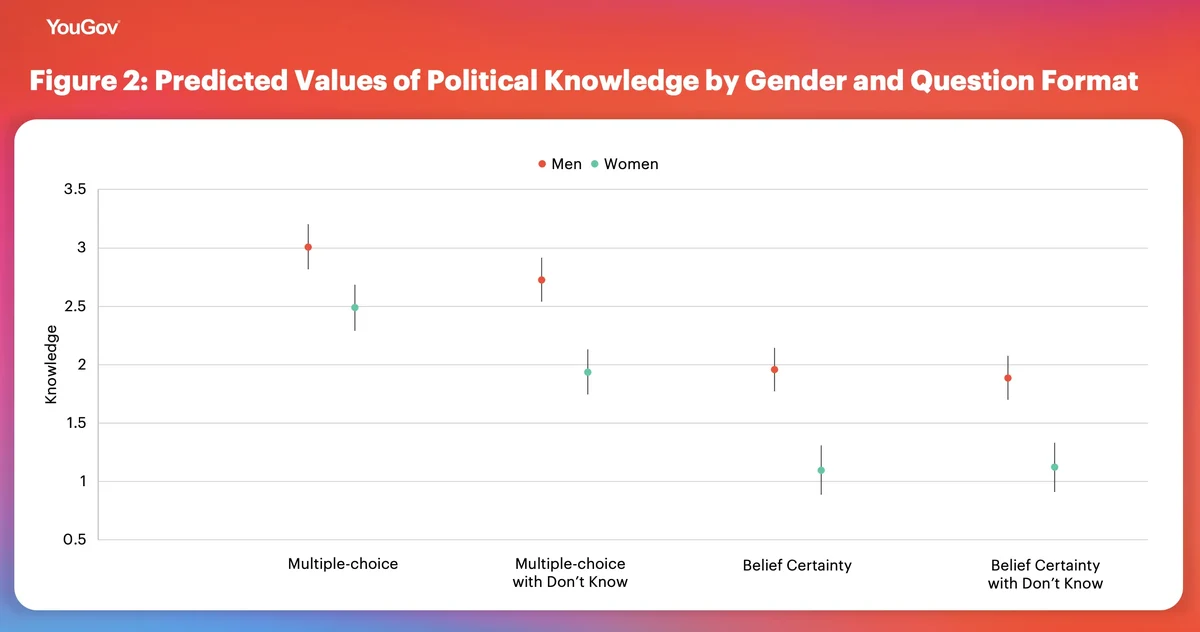

To further investigate the effects of changing the question format and providing a "Don't Know" response option on overall levels of knowledge, I employ a difference-in-differences model in which the question format treatments are interacted with gender. Figure 2 presents the average predicted political knowledge values across formats. In the multiple-choice condition, the gender gap increases from a non-significant 0.06 (p=.06) without a DK option to a significant 0.18 (p=.002) when a DK option is available, a three-fold increase in the observed gender gap in political knowledge.

The certainty-in-knowledge results show that including or excluding a DK option does not affect the difference in political knowledge between men and women. With the belief certainty format, the gender gap stays at about 0.80 irrespective of whether a DK option is included (without DK option: Men M=2.05, Women M=1.25, dif=0.80, p<.001; with DK option: Men M=2.03, Women M=1.23, dif=0.80, p<.001). Eliciting belief certainty when estimating political knowledge thus removes the confounding influence of DK responses, leaving the gender gap in political knowledge unchanged. Although the belief certainty format may show lower knowledge levels, this indicates only that most respondents are willing to acknowledge their uncertainty and the limits of their knowledge. This measurement method results in a more equitable evaluation of differences in political knowledge between men and women across a range of questions related to government and politics.
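The comparison can be checked with simple arithmetic on the gender gaps reported in the text. The sketch below only restates those published gaps; it does not reproduce the regression model, and the dictionary keys and function name are illustrative.

```python
# Gender gaps in predicted political knowledge, as reported in the text.
gaps = {
    "multiple_choice": {"no_dk": 0.06, "dk": 0.18},
    "certainty_in_knowledge": {"no_dk": 0.80, "dk": 0.80},
}

def dk_effect_on_gap(fmt):
    """Change in the gender gap when a DK option is added, within one format."""
    return round(gaps[fmt]["dk"] - gaps[fmt]["no_dk"], 2)

for fmt in gaps:
    print(fmt, dk_effect_on_gap(fmt))
# multiple_choice 0.12
# certainty_in_knowledge 0.0

# Ratio behind the "three-fold increase" in the multiple-choice condition.
ratio = round(gaps["multiple_choice"]["dk"] / gaps["multiple_choice"]["no_dk"], 1)

# Difference between the two within-format changes (difference-in-differences).
did = round(
    dk_effect_on_gap("multiple_choice") - dk_effect_on_gap("certainty_in_knowledge"), 2
)
print(ratio, did)  # 3.0 0.12
```

Adding a DK option widens the gap by 0.12 in the multiple-choice format and by 0.00 in the certainty-in-knowledge format, which is the contrast the interaction terms are meant to capture.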

Hypothesis 2

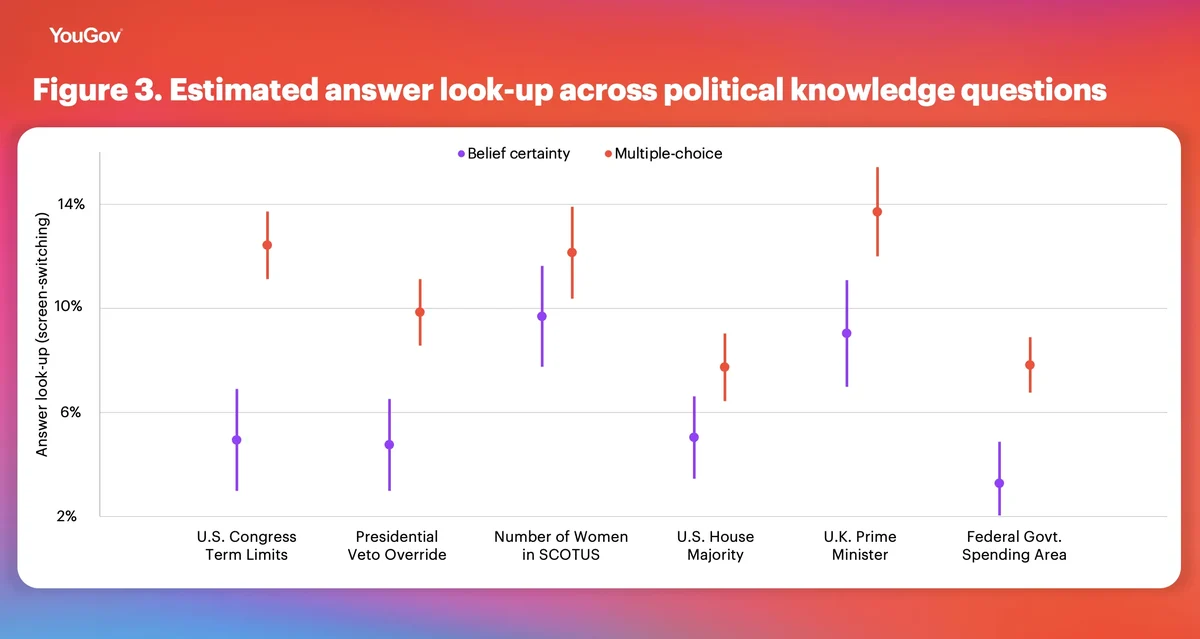

Figure 3 displays the percentage of respondents who were detected looking up answers to political knowledge questions (N=1,728 or 20% of the sample). Answer look-up is recorded at the item level as a binary variable (1 = search, 0 = no search). Hypothesis 2 tests the differences in screen-switching across the two question formats. There is a statistically significant difference in the mean levels of answer look-up across the two question formats (multiple-choice Mean=0.64, belief certainty Mean=0.37, p<.0001, df=1,941, two-tailed). On 5 of the 6 items, the certainty-in-knowledge format (black bars) shows significantly lower rates of answer look-up than the multiple-choice format. The only exception is the item asking about the number of women on the U.S. Supreme Court (p = .06). Even with the added follow-up question eliciting belief certainty, the multiple-choice format promotes more screen-switching on political knowledge questions.

Some may look up answers because they are genuinely ignorant and curious about the right answer, whereas others may look up answers because of self-enhancement motivations. Among those who were detected screen-switching, 45% report a "Don't Know" answer. This pattern requires further examination to explore what motivates respondents to look up answers in political knowledge surveys. To understand the characteristics associated with screen-switching, I estimate a regression model that includes gender, income, education, age, mobile device, partisanship, and question format. The dependent variable ranges from 0 (no knowledge items searched) to 6 (all knowledge items searched).

The linear regression results in Table 2 confirm that the belief certainty format significantly decreases online look-up after controlling for relevant covariates. This provides evidence that the certainty-in-knowledge format decreases respondents' motivation to look up answers on political knowledge questions. The model also shows that younger and higher-income respondents are more likely to engage in outside search. Surprisingly, education, type of device (i.e., mobile vs. desktop), and the availability of the 'Don't Know' option have no significant effects on answer look-up.

Table 2: Linear regression model of answer look-up

| | Answer look-up |
|---|---|
| Certainty-in-knowledge | -0.25*** (-0.07) |
| 'Don't Know' option | -0.06 |
| Gender (Women) | -0.02 (0.05) |
| Age | -0.01*** |
| Family income | 0.02* |
| Partisanship | 0.008 |
| Education | 0.01 (0.02) |
| Mobile | 0.004 |
| Constant | 1.10*** (0.14) |
| F-statistic | F(12.15, 8) |
| Adjusted R2 | .04 |
| df | 1,925 |

Note: Entries are linear regression coefficients and standard errors. The dependent variable is coded “1” if it was detected that the respondent left the survey active window.

*p < 0.05; **p < 0.01; ***p < 0.001

General discussion

The experimental results demonstrate that it is possible to capture a person's expressed certainty in their knowledge and that there are compelling reasons to make this change. The test of Hypothesis 1 provides evidence that the certainty-in-knowledge format limits the degree to which women underperform relative to men on political knowledge questions when there is a DK option. The test of Hypothesis 2 finds that the certainty-in-knowledge format significantly decreases the number of respondents who look up answers to knowledge questions compared to the standard multiple-choice format, even when a follow-up belief certainty question is available. Notably, answer look-up is more than halved in the certainty-in-knowledge format. This finding is valuable for public opinion researchers who seek to measure political awareness, as opposed to online search proficiency.

About the author

Robert Vidigal is a Statistician at the LAPOP Lab, Vanderbilt University. He was previously a Research Data Scientist at NYU's Center for Social Media and Politics and a Ph.D. Candidate in the Department of Political Science at Stony Brook University. He worked on the CSMaP independent panel project, which monitors discussion of political topics, media consumption, and the spread of disinformation, in both English and Spanish, in the lead-up to the 2022 U.S. elections.

About Methodology matters

Methodology matters is a series of research experiments focused on survey experience and survey measurement. The series aims to contribute to the academic and professional understanding of the online survey experience and promote best practices among researchers. Academic researchers are invited to submit their own research design and hypotheses.